DeepSeek-R1 is a reasoning model from DeepSeek AI that you can run locally on your desktop or laptop using Ollama.

In this tutorial, I’ll explain step-by-step how to run DeepSeek-R1 locally and how to set it up using Ollama. We’ll also explore building a simple RAG application that runs on your laptop using the R1 model, LangChain, and Gradio.

Why Run DeepSeek-R1 Locally?

Running DeepSeek-R1 locally gives you complete control over model execution without dependency on external servers. Here are a few advantages to running DeepSeek-R1 locally:

- Privacy & security: No data leaves your system.

- Uninterrupted access: Avoid rate limits, downtime, or service disruptions.

- Performance: Avoid network round-trips to a remote API. Actual response speed depends on your hardware and the model size you choose.

- Customization: Modify parameters, fine-tune prompts, and integrate the model into local applications.

- Cost efficiency: Eliminate API fees by running the model locally.

- Offline availability: Work without an internet connection once the model is downloaded.

Setting Up DeepSeek-R1 Locally With Ollama

Ollama simplifies running LLMs locally by handling model downloads, quantized model formats, and execution.

Step 1: Install Ollama

First, download and install Ollama from the official website.

Once the download is complete, install Ollama as you would any other application.

Step 2: Download and run DeepSeek-R1

Let’s test the setup and download our model. Launch the terminal and type the following command.

ollama run deepseek-r1

Ollama offers a range of DeepSeek-R1 models, from 1.5B parameters up to the full 671B-parameter model. The 671B model is the original DeepSeek-R1, while the smaller models are distilled versions based on Qwen and Llama architectures. If your hardware cannot support the 671B model, you can run a smaller version with the following command, replacing X with the parameter size in billions (1.5, 7, 8, 14, 32, 70, or 671):

ollama run deepseek-r1:Xb

With this flexibility, you can use DeepSeek-R1’s capabilities even if you don’t have a supercomputer.
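One rough way to choose a size is to estimate how much memory the weights need. The sketch below assumes about 4 bits per parameter plus 20% overhead. That figure is an illustrative rule of thumb, not a number published by Ollama or DeepSeek, and the helper names (`model_tag`, `estimate_gb`, `largest_fitting`) are hypothetical.

```python
from typing import Optional

# Sizes (in billions of parameters) listed in the text above.
SIZES_B = [1.5, 7, 8, 14, 32, 70, 671]


def model_tag(size_b: float) -> str:
    """Build the Ollama tag for a size, e.g. 7 -> 'deepseek-r1:7b'."""
    if size_b not in SIZES_B:
        raise ValueError(f"unknown size: {size_b}")
    # The 'g' format drops a trailing '.0' but keeps '1.5' intact.
    return f"deepseek-r1:{size_b:g}b"


def estimate_gb(size_b: float, bits_per_weight: float = 4.0,
                overhead: float = 1.2) -> float:
    """Rough memory estimate in GB. ASSUMPTION: 4-bit weights plus 20% overhead."""
    return size_b * bits_per_weight / 8 * overhead


def largest_fitting(memory_gb: float) -> Optional[str]:
    """Return the tag of the largest model whose estimate fits, or None."""
    fitting = [s for s in SIZES_B if estimate_gb(s) <= memory_gb]
    return model_tag(max(fitting)) if fitting else None
```

Treat the result as a starting point only: context length and other running applications also consume memory, so it is worth trying the suggested size and stepping down if it runs slowly.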

Step 3: Running DeepSeek-R1 in the background

To run DeepSeek-R1 continuously and serve it via an API, start the Ollama server:

ollama serve

Using DeepSeek-R1 Locally

Step 1: Running inference via CLI

Once the model is downloaded, you can interact with DeepSeek-R1 directly in the terminal.
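If you prefer to script the terminal interaction, the same command can be launched from Python. This is a minimal sketch that assumes the `ollama` executable is on your PATH and that passing the prompt as an argument after the model name runs a single, non-interactive query. `build_command` and `ask` are hypothetical helper names.

```python
import shutil
import subprocess
from typing import List


def build_command(prompt: str, model: str = "deepseek-r1") -> List[str]:
    """Return the argument list for a one-shot `ollama run` call."""
    return ["ollama", "run", model, prompt]


def ask(prompt: str, model: str = "deepseek-r1") -> str:
    """Run the CLI once and return whatever it printed to stdout."""
    if shutil.which("ollama") is None:
        raise RuntimeError("The ollama executable was not found on PATH")
    result = subprocess.run(
        build_command(prompt, model),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the CLI exits with an error
    )
    return result.stdout.strip()
```

Calling `ask("Solve: 25 * 25")` would then return the model's reply as a string, provided the model has already been downloaded.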

Step 2: Accessing DeepSeek-R1 via API

To integrate DeepSeek-R1 into applications, call the Ollama API. For example, with curl:

curl http://localhost:11434/api/chat -d '{
  "model": "deepseek-r1",
  "messages": [{ "role": "user", "content": "Solve: 25 * 25" }],
  "stream": false
}'

curl is a command-line tool for making HTTP requests directly from the terminal, which makes it convenient for interacting with APIs. It is available on Linux, macOS, and recent versions of Windows.

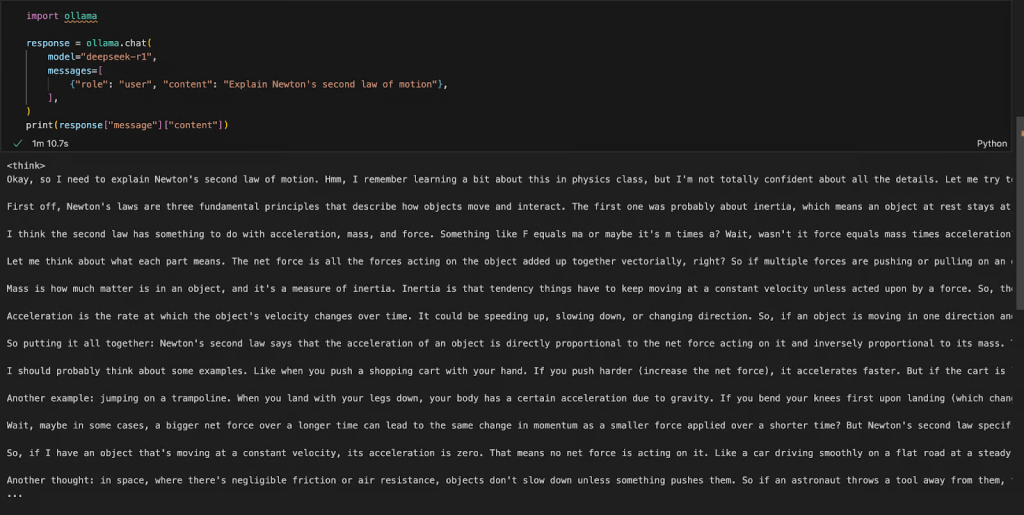
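The same request can be sent from Python using only the standard library, which helps show the shape of the request and response. This is a sketch that assumes the Ollama server is listening on the default address used in the curl example and that the non-streaming reply is a JSON object with the text under `message` and then `content`. `build_chat_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

# Default local endpoint, taken from the curl example above.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt: str, model: str = "deepseek-r1",
                       url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build the POST request that mirrors the curl call."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, model: str = "deepseek-r1", timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["message"]["content"]
```

With the server running, `print(chat("Solve: 25 * 25"))` should print the model's answer. The generous timeout is a guess to allow for slow first responses while the model loads.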

Step 3: Accessing DeepSeek-R1 via Python

You can call Ollama from Python in any integrated development environment (IDE) or notebook of your choice. Install the Ollama Python package with the following command (the leading ! is for notebook cells; omit it in a regular terminal):

!pip install ollama

Once the package is installed, use the following script to interact with the model:

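import ollama

# Send a single user message to the locally served model.
response = ollama.chat(
    model="deepseek-r1",
    messages=[
        {"role": "user", "content": "Explain Newton's second law of motion"},
    ],
)

# The reply text is stored under message -> content.
print(response["message"]["content"])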

The ollama.chat() function takes the model name and a list of messages (here, a single user prompt) and processes them as a conversational exchange. The script then extracts and prints the model’s response.
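DeepSeek-R1 is a reasoning model, so its reply may include the model's working before the final answer. The sketch below assumes the reasoning arrives inside `<think>...</think>` tags in the response text. Whether it does depends on your Ollama version and settings, so treat this as an assumption. `split_reasoning` is a hypothetical helper name.

```python
import re
from typing import Tuple

# ASSUMPTION: the reasoning is wrapped in a single <think>...</think> block.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[str, str]:
    """Return (reasoning, answer); reasoning is '' when no tags are present."""
    match = _THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Keep everything outside the think block as the final answer.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer
```

For example, `reasoning, answer = split_reasoning(response["message"]["content"])` would separate the two parts of the reply printed by the script above, letting you display only the final answer.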

Conclusion

Running DeepSeek-R1 locally with Ollama enables faster, private, and cost-effective model inference. With a simple installation process, CLI interaction, API support, and Python integration, you can use DeepSeek-R1 for a variety of AI applications, from general queries to complex retrieval-based tasks.